spARO volunteers are submitting Research News highlights about recent articles published in JARO, our society's journal. If you are interested in joining this effort, please complete the following survey (linked here). For submission guidelines, please click here.

RESEARCH NEWS HIGHLIGHTS:

Otoconia Structure After Short and Long Duration Exposure to Altered Gravity

Boyle, R., Varelas, J. JARO (2021) 22: 509-525. https://doi.org/10.1007/s10162-021-00791-6

Reported by: Pavan S. Krishnan, B.A., Johns Hopkins University, Department of Otolaryngology-Head & Neck

Otoconia are crystalline deposits of calcium carbonate in the otolith organs, which serve as inertial force sensors for head translation and tilt relative to gravity. These deposits are not structurally inert, yet relatively little is known about the mechanisms of otoconia remodeling under altered gravitational forces. The authors of this study set out to determine the effects of varying intensity and duration of altered gravity on otoconial integrity by exposing mice to near-weightlessness, microgravity, or hypergravity environments over different time frames.

Group 1 was composed of 3 male mice (2 wildtype, 1 transgenic C57Bl/10 J/PTN-Tg) that experienced 90 days of near-weightlessness in low Earth orbit on the International Space Station. The transgenic (PTN-Tg) mice overexpressed pleiotrophin, a cytokine related to bone formation, under the control of a bone-specific promoter. Group 2 was composed of 4 female mice that experienced 13 days of microgravity on NASA shuttle orbiters. Group 3 was composed of 3 male mice that experienced hindlimb unloading (a model for microgravity) for 90 days. Group 4 was composed of 4 male mice that experienced centrifugation amounting to 2.38g for 3 months. Controls were corresponding flight habitat and standard cage vivarium mice. Scanning electron microscopy (SEM) imaging of otoconia provided topographical imaging and superficial analysis, while SEM imaging of focused ion beam (FIB)-milled individual otoconia allowed for characterization of microstructures and evaluation of deposition or ablation of otoconia. Of note, this study was part of a tissue-sharing program; therefore, the gravity conditions could not be altered by the authors.

Inspection of otoconia from Group 1 mice showed evidence of superficial layering on the outer surface, though it was not clearly distinct in the transgenic mice. No topographic differences were noticed in otoconia among Group 2 and 3 mice. Otoconia from Group 4 mice showed alterations from the normal hexagonal shape to a deformed “dumbbell”-like appearance with hexagonal endings unusually exposed. With FIB milling, the authors observed extreme cavitations within the inner core.

These findings indicate that otoconia structure is susceptible to altered gravity, with possibly constructive processes occurring with decreased gravity and possibly destructive processes with hypergravity. Under challenges to re-establish normal function, is the otolith system modulated by an internal gravity sensor? Though descriptive in nature, this study serves as an important stepping stone toward quantifying the onset and magnitude of otoconial changes and their functional impacts. Ultimately, future studies should be conducted to elucidate the basic mechanism of otolith organ alterations, relating to endolymph chemistry and calcium remodeling.

Systemic Fluorescent Gentamicin Enters Neonatal Mouse Hair Cells Predominantly Through Sensory Mechanoelectrical Transduction Channels

Ayane Makabe, Yoshiyuki Kawashima, Yuriko Sakamaki, Ayako Maruyama, Taro Fujikawa, Taku Ito, Kiyoto Kurima, Andrew J. Griffith, and Takeshi Tsutsumi

Makabe A., Y. Kawashima, Y. Sakamaki, A. Maruyama, T. Fujikawa, T. Ito, K. Kurima, A.J. Griffith & T. Tsutsumi. JARO (2020) 21: 127-149. doi: 10.1007/s10162-020-00746-3

Reported by: Zahra Sayyid, MD PhD, Johns Hopkins University, Department of Otolaryngology-Head and Neck Surgery

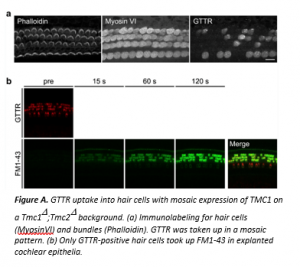

Aminoglycoside antibiotics are commonly prescribed worldwide, but they have known side effects of ototoxicity and nephrotoxicity. The primary known cause of the ototoxicity is the death (apoptosis) of inner ear hair cells, but the mechanism triggering this is unclear. Because damage to the inner ear is largely irreversible, aminoglycoside-induced hearing loss is typically permanent. Aminoglycosides are polar compounds that cannot pass through the lipid-containing membranes of hair cells. Rather, they enter via endocytosis and/or via transmembrane ion channels such as sensory mechanoelectrical transduction (MET) channels. This study tested the hypothesis that systemically administered aminoglycosides enter hair cells primarily through sensory MET channels and not via other channels or endocytosis.

Because transmembrane channel-like 1 (TMC1) and TMC2 are essential for sensory MET, the authors used mice lacking both genes (Tmc1Δ;Tmc2Δ mice) to model sensory MET deficiency; these mice are called double-knockouts (DKO) here. Gentamicin was conjugated with Texas Red (GTTR) to follow its entry into hair cells. This compound was injected into mouse pups at postnatal day 4 (P4). Three hours later, the drug had been taken up by hair cells from wild-type (WT) but not DKO mice, suggesting that TMC channel(s) are necessary for in vivo entry of gentamicin into hair cells.

The authors next examined the endocytic activity in DKO vs. WT hair cells in explanted cochlear epithelia. They incubated the tissues in culture medium with cationized ferritin so endocytic vesicles could be visualized by electron microscopy. Results showed that the DKO hair cells, like WT, retained apparently normal endocytic activity at their apical surfaces. The authors also asked whether GTTR uptake into hair cells depended on the presence of functional MET channels. They employed Tmc1::mCherry mice with a DKO background, which exhibit mosaic expression of the mCherry marker in hair cells. GTTR was injected into P4 mice. Although all hair cells were present with intact bundles 3 hours later, the distribution of GTTR-positive hair cells was mosaic: only GTTR-positive hair cells took up FM1-43, a marker of functional MET channels (Figure A).

In summary, gentamicin enters inner ear hair cells in vivo predominantly through sensory MET channels and not via endocytosis. These findings may prompt the development of future candidates to prevent ototoxicity, by searching for antagonists of sensory MET channels or by custom-designing aminoglycoside analogs that are unable to pass through MET channels.


A Model of Electrically Stimulated Auditory Nerve Fiber Responses with Peripheral and Central Sites of Spike Generation

Suyash Narendra Joshi, Torsten Dau, Bastian Epp

Joshi, S.N., Dau, T. & Epp, B. JARO (2017) 18: 323. https://doi.org/10.1007/s10162-016-0608-2

Reported by: Chemay R. Shola, University of Washington, Department of Bioengineering

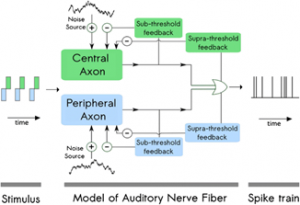

Cochlear implant (CI) users struggle to understand speech in noisy environments, leading researchers to seek solutions using signal processing methods. The so-called “envelopes” of speech sound packets can be extracted by the implant’s speech processor. Such envelopes can then be used for temporal modulation of electrical pulses sent to the auditory nerve fibers (ANFs). This paper describes a computational model that predicts ANF responses to electrical stimulation and takes into account where along an ANF the spike (or action potential) is generated. These findings may lead to the design of better CI stimulation paradigms that can improve the listening experience.

Ideally, a computational model should mimic experimental findings of ANF firing patterns. For instance, both anodic and cathodic stimuli can generate a spike in the ANF. Current computational models cannot account for certain details of how ANFs respond to such features of current pulse shapes [1] or other stimulus parameters. Joshi and colleagues present a computational model that assumes ANF neurons have two sites of spike generation, named the peripheral and central axons, respectively (Figure A). Moreover, these two sites differ in their sensitivity to either cathodic or anodic charges. The peripheral axon and the central axon are set up as separate exponential integrate-and-fire point neuron models with two adaptive currents. This mathematical model has been determined to accurately describe the spike time behavior of the ANF [2, 3]. The differences for anodic and cathodic charges were based on parameters obtained using a monophasic pulse from feline data. Stochastic properties of ANF firing were included by introducing an independent noise source to the model. The dynamic nature of the ANF was captured through subthreshold and suprathreshold adaptation, implemented as two feedback loops that dynamically impact the membrane potential; the time constants of the subthreshold and suprathreshold currents were adjusted to fit empirical data.

Various single-pulse shapes were tested, as well as paired pulses and pulse trains. Neural response characteristics from the model accurately mimicked experimental results for single axon responses. Other computational models use a parameter called activation time to model the difference between the thresholds for monophasic and biphasic pulses. In contrast, this model does not consider activation time; instead, the model charges the membrane voltage exponentially once it has crossed the threshold voltage. A sufficiently strong inhibitory stimulus will cancel the spike. Overall, the proposed model adds to a toolbox that researchers can use to investigate temporal coding in CI users.

Figure A. Structure of the proposed model.

References

(1) Joshi SN, T. Dau, B. Epp (2014). Modeling auditory nerve responses to electrical stimulation. In: Proceedings from Forum Acusticum

(2) Fourcaud-Trocmé N., D. Hansel, C. Van Vreeswijk and N. Brunel (2003) How spike generation mechanisms determine the neuronal response to fluctuating inputs. J Neuroscience 23(37):11628–11640

(3) Brette R. and W. Gerstner (2005) Adaptive exponential integrate-and-fire model as an effective description of neuronal activity. J Neurophysiol 94(5):3637–3642. doi:10.1152/jn.00686.2005
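For readers unfamiliar with the model class mentioned in this highlight, the following is a minimal sketch of a single adaptive exponential integrate-and-fire neuron of the kind described in reference (3). It is not the authors' ANF model: it has one spike-generation site instead of two, a single adaptation variable (with a subthreshold coupling `a` and a spike-triggered increment `b`) instead of two adaptive currents, and no noise source. The parameter values are the generic ones commonly quoted from Brette and Gerstner (2005), not values fitted to auditory nerve data, and the function name `simulate_adex` is hypothetical.

```python
import math

def simulate_adex(current_nA, duration_ms=200.0, dt_ms=0.01):
    """Forward-Euler simulation of one adaptive exponential
    integrate-and-fire neuron driven by a constant current.
    Returns the list of spike times in milliseconds."""
    # Generic illustrative parameters (assumed, not the ANF fit).
    C = 281.0        # membrane capacitance, pF
    g_L = 30.0       # leak conductance, nS
    E_L = -70.6      # leak reversal potential, mV
    V_T = -50.4      # soft threshold of the exponential term, mV
    delta_T = 2.0    # slope factor of the exponential term, mV
    tau_w = 144.0    # adaptation time constant, ms
    a = 4.0          # subthreshold adaptation coupling, nS
    b = 80.5         # spike-triggered adaptation increment, pA
    V_reset = -70.6  # reset potential after a spike, mV
    V_peak = 20.0    # numerical cutoff that counts as a spike, mV

    I = current_nA * 1000.0  # input current in pA
    V, w = E_L, 0.0          # membrane potential (mV), adaptation current (pA)
    spike_times = []
    n_steps = int(round(duration_ms / dt_ms))
    for step in range(n_steps):
        # Exponential spike-initiation current (pA); V < V_peak here,
        # so the exponent stays bounded.
        exp_current = g_L * delta_T * math.exp((V - V_T) / delta_T)
        dV = (-g_L * (V - E_L) + exp_current - w + I) / C  # mV per ms
        dw = (a * (V - E_L) - w) / tau_w                   # pA per ms
        V += dt_ms * dV
        w += dt_ms * dw
        if V >= V_peak:
            # Spike: record the time, reset the voltage, strengthen adaptation.
            spike_times.append(step * dt_ms)
            V = V_reset
            w += b
    return spike_times

if __name__ == "__main__":
    spikes = simulate_adex(1.0)
    print(f"{len(spikes)} spikes in 200 ms at 1 nA")
```

The exponential term is what produces the rapid upswing of the membrane voltage once the soft threshold is crossed, which corresponds to the exponential charging described in the highlight. Extending this sketch toward the published model would require a second site with different polarity sensitivity, a second adaptive current, and a noise term, none of which are attempted here.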


November 2020

Human Click-Based Echolocation of Distance: Superfine Acuity and Dynamic Clicking Behaviour

Lore Thaler, H. P. J. C. De Vos, Daniel Kish, Michail Antoniou, Christopher J. Baker & Maarten C.J. Hornikx

Thaler, L., H.P.J.C. De Vos, D. Kish, M. Antoniou, C.J. Baker & M.C.J. Hornikx. JARO (2019) 20: 499-510. https://doi.org/10.1007/s10162-019-00728-0

Reported by: Tammy Binh Pham, B.A. & Farhoud Faraji, M.D., Ph.D., University of California, San Diego, Department of Otolaryngology-Head and Neck Surgery

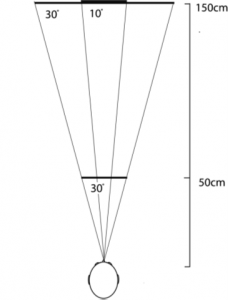

Echolocation – the acoustic method of sensing spatial information via reflections of self-made sounds – is an ability commonly associated with bats and marine mammals. This study by Thaler et al. adds to the growing body of research on the phenomenon of click-based echolocation among humans and provides insights into the adaptations that blind human echolocators may use to discriminate object size and distance.

The study used a distance-discrimination task performed by blind individuals with experience in click-based echolocation (N=8). Depth perception acuity and clicking behaviors were evaluated. In each trial, participants 1) echolocated a reflector placed first at a reference distance and subsequently at a different test distance, and 2) were asked to determine whether the test distance was closer or farther relative to the reference distance. In each subsequent trial, the test distance approached the reference distance such that distance discrimination would become increasingly difficult. Figure 1 details the reflector sizes and distances used. The accuracy of the participant responses, as well as clicking behavior (measured with microphones mounted to the participant’s head), were recorded.

Fig. 1. Bird's-eye view of the experimental set-up. Reflectors (5 cm thick wood disks) were presented at either 50 cm (28.5 cm diameter disk only) or 150 cm (28.5 and 80 cm diameter disks). The acoustic projections of the larger disk at 150 cm and the smaller disk at 50 cm were 30°, while that of the smaller disk at 150 cm was only 10°. Reflectors were tested one at a time, and each reference distance was tested separately.

The results showed that experienced echolocators were able to determine reflector distances with high acuity. They were able to detect changes of 3 cm at the 50-cm reference distance, and of 7 cm at the 150-cm reference distance, regardless of reflector size. Regarding clicking behavior, participants used more clicks for the smaller disk at 150 cm than at 50 cm and more clicks for the smaller disk at 150 cm than for the larger disk at 150 cm, while the number of clicks did not differ between the smaller disk at 50 cm and the larger disk at 150 cm. Furthermore, the intensity of clicks increased as a function of reflector distance, but did not differ between different reflector sizes at the same distance. These findings show that experienced echolocators change the number and intensity of clicks in response to changes in the echo-acoustic strength of the presented reflectors.

In light of data showing that even individuals newly trained in echolocation were better able to detect and avoid obstacles when they used echolocation [1,2], the findings from this study may guide the development of echolocation training for blind individuals without previous echolocation experience, and furthermore have the potential to drive the development and tuning of assisted echolocation devices [3].

References

1. Kolarik AA-O, Scarfe AC, Moore BC, Pardhan S. Blindness enhances auditory obstacle circumvention: Assessing echolocation, sensory substitution, and visual-based navigation. PLoS One. 2017;12(4).

2. Thaler L, Zhang X, Antoniou M, Kish DC, Cowie D. The flexible action system: Click-based echolocation may replace certain visual functionality for adaptive walking. J Exp Psychol Hum Percept Perform. 2020;46(1):21-35.

3. Sohl-Dickstein J, Teng S, Gaub BM, et al. A Device for Human Ultrasonic Echolocation. IEEE Trans Biomed Eng. 2015;62(6):1526-1534.
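The angular sizes quoted in the Fig. 1 caption can be checked with a short calculation. The sketch below assumes that "acoustic projection" means the full angle subtended by the disk's diameter at the participant's position, with the participant on the disk's central axis; that interpretation and the function name `subtended_angle_deg` are assumptions made for illustration, not details taken from the paper.

```python
import math

def subtended_angle_deg(diameter_cm, distance_cm):
    """Full angle, in degrees, subtended by a disk of the given diameter
    when viewed face-on from a point on its central axis."""
    half_angle_rad = math.atan((diameter_cm / 2.0) / distance_cm)
    return math.degrees(2.0 * half_angle_rad)

# The three reflector conditions described in the Fig. 1 caption.
conditions = [
    ("28.5 cm disk at 50 cm", 28.5, 50.0),
    ("80 cm disk at 150 cm", 80.0, 150.0),
    ("28.5 cm disk at 150 cm", 28.5, 150.0),
]

for label, diameter, distance in conditions:
    angle = subtended_angle_deg(diameter, distance)
    print(f"{label}: {angle:.1f} degrees")
# Prints approximately 31.8, 29.9 and 10.9 degrees respectively.
```

Under this assumption the small disk at 50 cm and the large disk at 150 cm subtend nearly the same angle (about 30°), which is consistent with the design logic of matching angular size across distance, while the small disk at 150 cm subtends roughly a third of that.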


Reciprocal Matched Filtering in the Inner Ear of the African Clawed Frog (Xenopus laevis)

Ariadna Cobo-Cuán and Peter M. Narins

Cobo-Cuan, A. and P.M. Narins, JARO (2020) 21: 33-42. DOI: 10.1007/s10162-019-00740-4

Reported By: Roberto E. Rodríguez-Morales, B.Sc., University of Puerto Rico

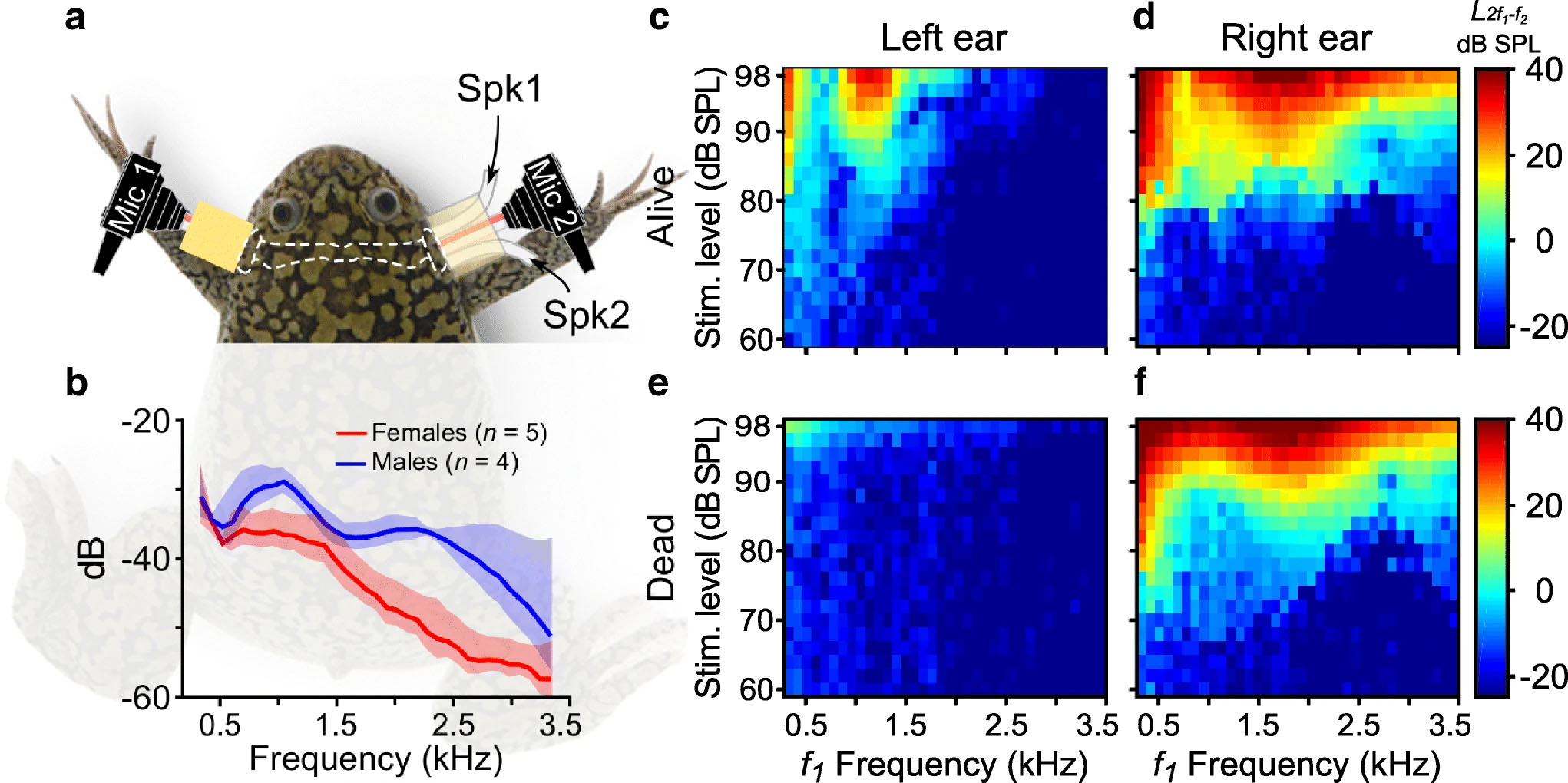

Acoustic transmission is the principal mediator of communication, and thus reproduction, in most anuran species. The African Clawed Frog is one of the few frog species where not only males, but also females vocalize during courtship. This study by Cobo-Cuán and Narins reports a complex and sex-specific sound-filtering system that is poised to optimize detection of the opposite sex’s call. This might provide an evolutionary advantage in habitats crowded with loud noises or competing sounds from other species (or the opposite sex of the same species).

These researchers analyzed distortion product otoacoustic emissions (DPOAE) to measure inner ear sensitivity of the amphibian and basilar papillae of male and female African Clawed Frogs. The animals show clear structural adaptations that enable them to hear in water. However, they appear to exhibit lower hearing sensitivity in air than in water, which is a challenge when measuring DPOAE. This cannot be easily overcome with louder sound stimuli, as they could generate artifactual distortions that cloud otoacoustic emissions. To solve this, the authors took advantage of a unique anatomical feature of this species of frog: both middle ear cavities and the larynx are connected through an air-filled space called the interaural recess. Hence, presenting a sound stimulus through the right ear while recording binaural DPOAEs can exclude any confounding system distortions generated by the left side (Fig. 1).

Fig. 1. Binaural DPOAE recording in Xenopus laevis. a) Experimental set-up. Two microphones placed on each side (mic 1 = left and mic 2 = right). One speaker (Spk1) placed on the right ear. b) Sound pressure levels of monaurally presented stimuli are recorded by both DPOAE microphones and plotted in decibels (dB) relative to pressure level in the right ear. c-f) Color maps representing DPOAE responses and system-generated distortions from one live and one dead frog, respectively.

The data show that the frequency of highest amplitude from DPOAE recordings in females closely resembles the dominant emission frequencies in the male’s mating call. Males’ DPOAE amplitudes were highest at the dominant frequencies produced by the female’s call. These sex differences coincide with previous anatomical data showing that male frogs have a larger tympanic disk, and a higher sensitivity to lower frequencies than females [1]. Males are 20-30% smaller than females, so the DPOAE sex differences might also correlate with the smaller interaural recess in males. Additionally, DPOAE generation is associated with stereociliary transduction from auditory hair cells. This suggests the auditory epithelium of the African Clawed Frog could be where sexual dimorphism in hearing is created.

The human ear is equipped to receive an ample repertoire of sound frequencies, filtering them out in higher processing centers of the brain. Some non-mammalian vertebrates do not have this luxury and may instead rely on a sound frequency pre-filtering mechanism to minimize post-processing in the central nervous system, as demonstrated by this study.

References


- Mason, M., M. Wang, and P. Narins, Structure and Function of the Middle Ear Apparatus of the Aquatic Frog, Xenopus Laevis. Proc Inst Acoust, 2009. 31: p. 13-21.

Morphological Immaturity of the Neonatal Organ of Corti and Associated Structures in Humans

Sebastiaan W.F. Meenderink, Christopher A. Shera, Michelle D. Valero, M. Charles Liberman, & Carolina Abdala

Meenderink, S.W.F., et al., JARO (2019) 20:461–474. DOI: 10.1007/s10162-019-00734-2

Reported by: Murray J. Bartho, B.S., Stanford University School of Medicine, Department of Otolaryngology – Head & Neck Surgery

To date, the general scientific consensus has been that the cochlea and organ of Corti (OoC) are fully formed at birth. Confoundingly, newborns still exhibit functional immaturities, such as prolonged phase-gradient delays in distortion product otoacoustic emission (DPOAE) tests. Meenderink and colleagues compare neonatal and adult temporal bones to see if these immaturities are caused by differences in cochlear geometry. This study uncovers important final cochlear maturation steps which are not well defined or understood, and encourages further study into inner ear micromechanics.

Human cochleae reach adult size by fetal week 19 [1], and numerous anatomical studies have shown that micromechanical elements of the cochlea are mostly complete by the 2nd trimester, with changes to the outer hair cells and their efferent processes finishing in the 3rd trimester [2]. Neither known cochlear morphology nor sensory cell development explains the increased rate of change seen in neonate DPOAE phase-gradient delays at f2 frequencies below 1-2 kHz, nor is the delay explained by middle ear mechanical immaturities [3]. To investigate the possible cause of this delay, this paper examined 18 morphological features along the length of the neonatal and adult cochlea, including the OoC and associated structures.

The authors found that adult cochleae differed significantly from their neonate counterparts in 16 of 18 morphometrics. As seen in Figure A, the newborn scala tympani and scala vestibuli were significantly larger in area compared to those of adults. Newborns also had a wider and thinner spiral lamina (SL) due to an elongated non-ossified “bridge” section and thin pars tecta and pars pectinata sections. Adults have a larger OoC by area and maximal height, while the tunnel of Corti was wider and shorter in newborns compared with adults.

FIGURE A. The size and shape of cochlear scalae (SV = scala vestibuli; SM = scala media; ST = scala tympani) in adults (red) and newborns (cyan).

The authors posit that newborn otoacoustic measurement aberrations are consistent with immature cochlear morphology. The basilar membrane (BM) and SL non-ossified bridge are wider in newborns than adults, and the neonate BM is thinner. These factors create a more compliant membrane in the neonate, which slows down cochlear transverse wave propagation, potentially causing the DPOAE phase-gradient delays seen in newborns. Taken as a whole, this paper represents a comprehensive examination of the morphology of newborn and adult cochleae, and serves as a foundation for further exploration into newborn DPOAE phase-gradient delays, developmental cochlear micromechanics, and accurate cochlear modeling.

References

1. Jeffery N, Spoor F (2004) Prenatal growth and development of the modern human labyrinth. J Anat 204:71–92

2. Ruben RJ (1967) Development of the inner ear of the mouse: a radioautographic study of terminal mitosis. Acta Otolaryngol Suppl 220:1–44

3. Abdala C, Dhar S, Kalluri R (2011a) Level dependence of distortion product otoacoustic emission phase is attributed to component mixing. J Acoust Soc Am 129:3123–3133. https://doi.org/10.1121/1.3573992


A Physiologically Inspired Model for Solving the Cocktail Party Problem

Chou, K.F., Dong J., Colburn, H.S., & Sen, K. JARO (2019) 20: 579-593

Reported By: Langchen Fan, Ph.D., University of Rochester, Department of Biomedical Engineering

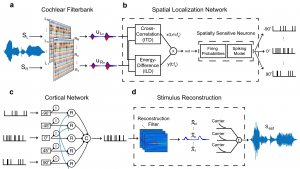

Human listeners with normal hearing can follow a specific sound source in a complex auditory scene (the so-called cocktail party problem). This study proposed a model with a few processing stages that mimic physiological characteristics to explain human performance (i.e. intelligibility) in the cocktail party problem.

The model consisted of a cochlear filterbank, a spatial localization network, and a cortical network (Figure A). The cochlear filterbank at each ear decomposed incoming sounds into different frequency components, serving as the cochlea or auditory nerve in the auditory pathway. The spatial localization network, mimicking the midbrain, combined the classical binaural cues (the interaural time difference of the envelope and the interaural level difference) in each frequency channel. This network included model neurons with spatial tuning, with preferred directions of 0° (front), ±45°, and ±90°. The model neurons of the cortical network received excitatory inputs from midbrain model neurons with the same preferred direction; cortical model neurons with a preferred direction other than 0° also received inhibitory input from midbrain model neurons with a 0° preferred direction. The output spikes were reconstructed into waveforms with a set of trained reconstruction filters. Intelligibility was evaluated by the similarity between the reconstructed waveform and the target sentence.

The model was examined mainly under two conditions: target sentence only, and target sentence with two maskers. For the target-alone condition, the target was highly intelligible for spatial locations from 0 to 90°. In the second condition, the target was located at 0°, and the two maskers were symmetrically located between 0 and ±90°. The intelligibility of the target sentence increased with more spatial separation, in contrast with decreased intelligibility of the maskers; the intelligibility plateaued at about 25°. These results were consistent with previous physiological results showing that cortical neurons can have broad spatial tuning to single targets as well as selective tuning to a target in a complex auditory scenario [1]. The study also showed that a sentence is intelligible if the sentence comes from the preferred direction of the inhibitory input, suggesting a generalization of the findings to all directions.

This model mimicked a few important physiological processing stages of incoming sounds and successfully explained a basic condition of the cocktail party problem. This model not only shows potential to explain more complex conditions, but also opens up the possibility of including top-down modulation.

Figure A (Figure 1 from the original paper). Illustration of the model structure. a) Cochlear filterbank: includes 36 independent filters at each ear to decompose the incoming sound, outputting narrowband waveforms. b) Spatial localization network: combines interaural time and level differences at each frequency channel; outputs spike trains based on the preferred direction of the model neurons. c) Cortical network: combines information from all preferred directions. d) Stimulus reconstruction: reconstructs processed waveform from spikes to estimate intelligibility.

Reference

1. Narayan, R., Grana, G., & Sen, K. (2006). Distinct time scales in cortical discrimination of natural sounds in songbirds. Journal of Neurophysiology, 96(1), 252-258.

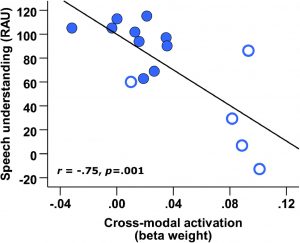

Deleting the HCN1 Subunit of Hyperpolarization-Activated Ion Channels in Mice Impairs Acoustic Startle Reflexes, Gap Detection, and Spatial Localization. James Ison, Paul Allen, and Donata Oertel. Ison JR, Allen PD, & Oertel D. JARO (2017) 18(3):427. DOI: 10.1007/s10162-016-0610-8

Reported by: George Ordiway, B.S., Northwestern University, Department of Communication Sciences and Disorders.

Ion channel expression and function are critical to all neural activity, especially time-sensitive auditory processes like sound localization. While extensive molecular, cellular, and systems research has demonstrated the importance of ion channels in auditory physiology, Ison et al. have identified behavioral consequences of disrupting a specific ion channel, the hyperpolarization-activated cyclic nucleotide-gated (HCN) channel. HCN channels are expressed in many different brain systems as well as in the heart. Recent in vitro studies of HCN channel function in the peripheral and central auditory system have examined neural excitability [1], gap detection [2], and spontaneous activity [3]. Of the four HCN channel subunits, HCN1 has the fastest kinetics. To address HCN1's role in temporal precision, the authors used an HCN1-/- null mutant mouse to explore functionally relevant auditory tasks. In a series of experiments, the authors identified differences between HCN1+/+ and HCN1-/- mice on a range of auditory and behavioral measures. HCN1-/- mice had increased high-frequency thresholds, and wave II of the auditory brainstem response (ABR) was nearly absent. These findings suggest a lack of temporally synchronous firing at the level of the cochlear nucleus. To examine the importance of HCN channels for sound localization, the authors used an acoustic startle protocol, which showed that HCN1-/- mice required a longer interstimulus interval (ISI) for the best temporal integration of sound signals. Prepulse inhibition (PPI) experiments revealed that short gap durations led to diminished PPI in HCN1-/- mice.
When ISI was varied to observe the depression of the acoustic startle response, HCN1-/- mice showed significantly lower levels of depression, which suggests deficient temporal precision. One significant finding involved a test of spatial localization (Figure A). When sounds were separated by 45° in space, HCN1+/+ mice showed maximal PPI at a 30 ms ISI; HCN1-/- mice showed diminished PPI across most ISIs. For 15°, 30°, and 45° of angular separation, HCN1-/- mice showed significantly less PPI, indicating failures to spatially separate sound stimuli. The "minimum audible angle" for angular separation in HCN1-/- mice was twice as high as in HCN1+/+ mice. Overall, these experiments show a variety of behavioral, auditory, and even vestibular deficits in HCN1-/- mice. They not only support in vitro work demonstrating the importance of HCN channels, but also indicate that the HCN1 channel subunit is critical for central auditory function and sound localization. HCN1-/- mice also presented with vestibular and muscular deficiencies. Such findings are encouraging for future study of auditory and vestibular interaction in peripheral and central systems.
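For readers unfamiliar with the measure, prepulse inhibition is commonly expressed as the fractional reduction of the startle response when a prepulse (here, a gap or a change in sound location) precedes the startle stimulus. The sketch below uses that common convention; it is an assumption that the paper's analysis follows exactly this form, and the function name and the amplitude values are hypothetical.

```python
def prepulse_inhibition(startle_with_prepulse, startle_alone):
    """Fractional reduction of the startle response caused by a prepulse.

    Uses the common convention PPI = 1 - (response with prepulse / response
    to the startle stimulus alone). 0 means no inhibition; 1 means the
    startle response was abolished. ASSUMPTION: the paper may normalize
    its data differently.
    """
    if startle_alone <= 0:
        raise ValueError("startle_alone must be positive")
    return 1.0 - startle_with_prepulse / startle_alone


# Hypothetical startle amplitudes (arbitrary units), for illustration only.
strong_inhibition = prepulse_inhibition(40.0, 100.0)
weak_inhibition = prepulse_inhibition(85.0, 100.0)
print(round(strong_inhibition, 2), round(weak_inhibition, 2))
```

Under this convention, a smaller PPI for a given angular separation means the animal's startle was less affected by the change in speaker location, which is how reduced PPI can be read as poorer spatial discrimination.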

Figure A: Prepulse inhibition (PPI) for different interstimulus intervals (ISI) and angular separations of sound. (a) PPI for 45° separation over a range of ISIs. (b) Mean PPI across 30-60 ms ISI (indicated by the box in Fig. 7A) shown over a range of angular separations. HCN1+/+ mice are indicated by filled circles and the solid line, HCN1-/- mice by open circles and the dashed line.

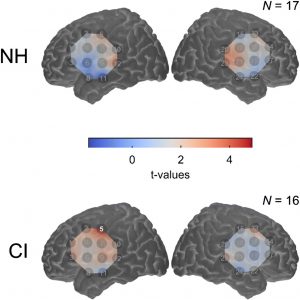

March 2020

Pre-operative Brain Imaging Using Functional Near-Infrared Spectroscopy Helps Predict Cochlear Implant Outcome in Deaf Adults. Carly A. Anderson, Ian M. Wiggins, Padraig T. Kitterick, and Douglas E. H. Hartley. Anderson, C.A., Wiggins, I.M., Kitterick, P.T., & Hartley, D.E. JARO (2019) 20: 511-528. doi.org/10.1007/s10162-019-00729-z

Reported by: Jacquelyn DeVries, B.S. & Christine Boone, M.D., Ph.D., University of California, San Diego School of Medicine

Cochlear implants (CIs) offer the hope of hearing restoration to deaf patients, but outcomes of the procedure are highly variable and difficult to predict. This report shows that brain activity during a speechreading task may serve as a biomarker that is predictive of cochlear implant success. Currently, the best practice for prognostication is to consider age at onset of hearing loss, duration of auditory deprivation, residual hearing, and hearing-aid use. However, these indicators account for only 22% of the variability in CI outcome [1]. This study revealed superior temporal cortex (STC) activation as a promising potential biomarker to facilitate prediction of future speech comprehension in CI users. Deaf patients may experience cortical reorganization of sensory and speech processing associated with differences in speech comprehension. Deafness-induced cross-modal plasticity has been observed bilaterally within the posterior superior temporal cortex (bSTC), which is activated by auditory input in normal-hearing individuals. Heightened visual speechreading skills have been associated with enhanced bSTC activation and faster neural processing of speech [2]. In this study, speechreading ability and cortical activation were measured preoperatively in 17 adults with bilateral profound deafness (CI group) and in 17 normal-hearing (NH) adults. Six months after implantation, auditory speech recognition was tested.
Functional near-infrared spectroscopy (fNIRS), a neuroimaging modality based on hemodynamic responses, was used to assess neural activity. Preoperative bSTC activation in response to visual speech stimuli did not differ between CI and NH groups (Figure A). In the CI group, however, pre-implantation speechreading ability was positively associated with visual speech-related activation of bSTC and left STC. Cortical reorganization in this region may be associated with superior speechreading abilities observed in deaf individuals.

Figure A. (Image at Right) Map of pre-implant cortical activation for visual speech. As a group, the level of cross-modal activation was not significantly enhanced in deaf participants compared to normal hearing control participants.

Pre-implantation bSTC activation to visual speech was predictive of worse auditory speech recognition 6 months after implantation (Figure B). This negative relationship was driven by pre- and peri-lingually deaf patients, but not post-lingually deaf patients. Regression analysis revealed that bSTC activation from fNIRS data added significant incremental predictive value, independent of the covariates age at onset of deafness, duration of deafness, and speechreading ability. Greater bSTC activation to visual speech before CI was not associated with reduced bSTC activation in response to auditory speech at 6 months after CI, suggesting that cross-modal activation and cortical reorganization in deafness did not hinder subsequent recruitment of activity in this region with auditory speech after CI.

Figure B. (Image at Left). CI outcome at 6 months postoperatively is predicted by pre-implant cross-modal STC activation. Open markers represent data obtained from pre- and peri-lingually deaf CI users.

Overall, this paper is a promising development towards a tool to help inform patients' expectations and assist with decision-making in considering CI treatment. Further investigation could help determine the clinical utility of this potential biomarker and the nature of its relationship with pre- and post-lingual deafness.

1. Lazard DS, Vincent C, Venail F, et al. Pre-, per- and postoperative factors affecting performance of postlinguistically deaf adults using cochlear implants: a new conceptual model over time. PLoS One. 2012;7(11):e48739.
2. Suh MW, Lee HJ, Kim JS, Chung CK, Oh SH. Speech experience shapes the speechreading network and subsequent deafness facilitates it. Brain. 2009;132(Pt 10):2761-2771.

AAV-Mediated Neurotrophin Gene Therapy Promotes Improved Survival of Cochlear Spiral Ganglion Neurons in Neonatally Deafened Cats: Comparison of AAV2-hBDNF and AAV5-hGDNF. Patricia A. Leake, Stephen J. Rebscher, Chantale Dore' & Omar Akil. Leake PA, Rebscher SJ, Dore' C, Akil O. JARO (2019) 20: 341. DOI: 10.1007/s10162-019-00723-5

Reported by: Sumana Ghosh, Ph.D., University of Mississippi Medical Center, Department of Neurobiology and Anatomical Sciences

Cochlear implant (CI) technology has revolutionized the treatment of sensorineural hearing loss (SNHL), but it depends on the health and survival of spiral ganglion neurons (SGNs) to ensure a maximal benefit for speech and language perception. To address this need, a new study by Leake and collaborators has focused on virus-based delivery of growth factors directly into the deafened cochlea in an animal model. Various neurotrophic factors (NFs), including brain-derived neurotrophic factor (BDNF), neurotrophin-3 (NT3), and glial cell line-derived neurotrophic factor (GDNF), are known to regulate the survival and maturation of neurons during development. A previous study by the same group showed that the administration of BDNF by osmotic pump accompanied by electrical stimulation improved neural survival in deafened cats, although it also induced ectopic sprouting of radial nerve fibers as a side effect. These aberrant trajectories can muddle the transmission of tonotopic information and, in principle, affect speech-in-noise perception. Moreover, the osmotic pump is a problematic mode of drug delivery, since it presents a risk of pathogen infection to the inner ear.

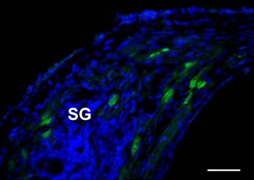

Figure A. GFP immunofluorescence in the SG 4 weeks after AAV-GFP transfection in the neonatally deafened cat.

In this study, the authors investigated the efficacy of an adeno-associated virus (AAV)-mediated NF gene delivery approach in mitigating SGN degeneration in juvenile cats that were first deafened before hearing onset by an ototoxic antibiotic. Two different serotypes of AAV vectors containing either hGDNF (serotype 5) or hBDNF (serotype 2), provided by uniQure Biopharma, Amsterdam, the Netherlands, were injected into the cochlea unilaterally while the contralateral ear served as control. Gene transduction efficacy was confirmed by transducing green fluorescent protein in a different group of "control" animals (Figure A). After three months, both NFs significantly improved SGN survival. However, the two NFs varied in their effects on radial fiber behavior. AAV5-hGDNF produced ectopic radial fiber sprouting, while AAV2-hBDNF did not. Moreover, the protective effects of hBDNF persisted over a long period (six months after transfection), in contrast to the progressive degeneration of SGNs on the control side (Figure B). hBDNF augmented the survival of radial fibers two-fold compared to the untreated contralateral ears, in which SGNs progressively degenerated. Maintenance of the auditory nerve is critical for successful outcomes in both pediatric and elderly populations with auditory neuropathies. This study demonstrates that although AAV2 transfects only 5-10% of SGNs, this produces a physiological concentration of NF without undesired side effects. Importantly, the resulting neuroprotective effect can be achieved with only a single injection of virus, which is advantageous over osmotic pumps in a clinical setting.

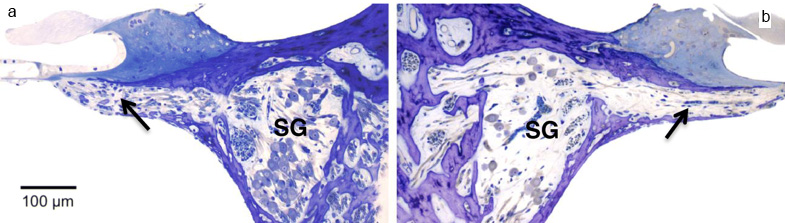

Figure B. Staining of cochlear sections with toluidine blue and subsequent visualization by light microscopy demonstrated improved survival of the neural cells and the radial fibers (indicated by the black arrow) in the AAV2-hBDNF-transfected ear (a) compared with the contralateral ear (b) 26 weeks post-injection.

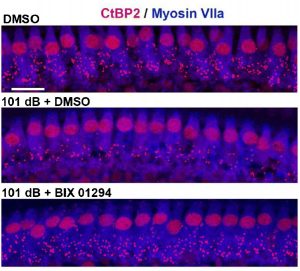

Inhibition of Histone Methyltransferase G9a Attenuates Noise-Induced Cochlear Synaptopathy and Hearing Loss. Hao Xiong, Haishan Long, Song Pan, Ruosha Lai, Xianren Wang, Yuanping Zhu, Kayla Hill, Qiaojun Fang, Yiqing Zheng and Su-Hua Sha. Xiong, H., Long, H., Pan, S., Lai, R., Wang, X., Zhu, Y., Hill, K., Fang, Q., Zheng, Y., Sha, S.H. JARO (2019) 20: 217-232. DOI: 10.1007/s10162-019-00714-6

Reported by: Niliksha Gunewardene, Ph.D., Bionics Institute, Australia

Noise-induced hearing loss is one of the most common forms of sensorineural hearing loss in humans, and it has been on the rise for the last decade. This new study manipulates a biochemical pathway in noise-exposed mice that appears to protect the inner ear from some of this damage. In this recent publication, Xiong et al. propose a novel epigenetic mechanism for noise-induced hearing loss in mice. Epigenetic changes contribute to chromatin structural changes, whereby open versus closed chromatin states are associated with gene activation and repression, respectively. This study reports a role for the epigenetic enzyme G9a in mediating the susceptibility of cochlear hair cells to noise damage. G9a is a histone lysine methyltransferase that specifically dimethylates lysine 9 of histone 3 (H3K9me2), causing gene silencing. Here, the authors utilise a published moderate noise damage model in mice that causes permanent auditory threshold shifts, loss of ribbon synapses, and outer hair cell loss, particularly in the basal (high-frequency) region [1]. This manipulation activates G9a, causing an increase in H3K9me2 in cochlear cells, as evidenced by immunolabelling. Notably, treatment of mice prior to noise exposure with inhibitors of G9a (either a pharmaceutical or a small interfering RNA) resulted in significant attenuation of noise-induced threshold shifts and protection of the outer hair cells and ribbon synapses, particularly in the basal regions (Figure A). To explore one mechanism underlying the protective effect of G9a inhibition, the authors focused on potassium voltage-gated channel subfamily Q member 4 (KCNQ4), expressed in sensory hair cells. They show that G9a inhibition in noise-damaged cochleae maintains the levels of KCNQ4 (which would otherwise be downregulated) and reduces the susceptibility of outer hair cells to noise-induced damage.

Figure A: Inhibition of G9a prevents loss of synapses in the basal turn of the cochlea. Differences in the number of synaptic puncta (CtBP2+) between normal (top), noise-exposed (middle), and noise-exposed cochleae treated with a pharmacological inhibitor of G9a (bottom). Scale bar = 10 μm. From Xiong et al. (2019).

Treatments to protect against or reverse hearing loss will benefit from a better understanding of the molecular mechanisms underlying cochlear damage. This study has identified a potential mechanism that can be manipulated to protect cochlear hair cells and synapses from noise damage in mice. While it will be interesting to determine if G9a inhibition has an effect post-noise exposure, these findings indicate a role for epigenetic modifications in altering the susceptibility of cochlear cells to noise-induced damage.

1. Hill, K., Yuan, H., Wang, X., and Sha, S.H. (2016). Noise-Induced Loss of Hair Cells and Cochlear Synaptopathy Are Mediated by the Activation of AMPK. J Neurosci 36, 7497-7510.

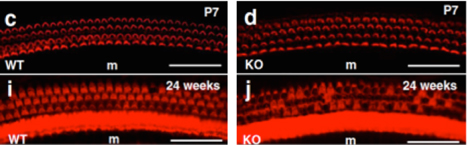

Early Hearing Loss upon Disruption of Slc4a10 in C57BL/6 Mice. Antje K. Huebner, Hannes Maier, Alena Maul, Sandor Nietzsche, Tanja Herrmann, Jeppe Praetorius, and Christian A. Hübner. Huebner, A.K., Maier, H., Maul, A., Nietzsche, S., Herrmann, T., Praetorius, J., Hübner, C.A. JARO (2019) 20: 233-245. https://doi.org/10.1007/s10162-019-00719-1

Reported by: Arielle Hogan, B.S., University of Virginia School of Medicine, Department of Cell and Developmental Biology

Progressive hearing loss is a common symptom that comes with age. In this publication, Huebner et al. investigate a possible key component of the mechanism of degenerative hearing loss: a sodium-coupled bicarbonate transporter found in gap junctions known as Slc4a10. They show that Slc4a10 plays a major role in the maintenance of auditory sensory function through its regulation of the endocochlear potential and hair cell morphology in the inner ear. The sensory phenomenon of hearing is a complex biological function. Key to its smooth operation is the maintenance of concentration gradients and their resulting electrical potentials in the cochlea. The endocochlear potential (EP) is the electrical potential generated by the differential concentrations of potassium (K+) between the endolymph and perilymph, two fluids of the cochlear canal separated by the basilar membrane. The K+ gradient and EP are maintained by the cells of the stria vascularis, which are connected to fibrocytes of the spiral ligament (SL) through gap junctions. After verifying Slc4a10 expression in the SL fibrocytes, the authors measured acid extrusion efficiency to see whether Slc4a10 has the same acid extrusion function in the inner ear as in other cell types. Acid extrusion, however, was normal in Slc4a10 knockout (KO) mice, suggesting that other transporters may act redundantly to facilitate acid extrusion in the inner ear. The researchers then tested hearing ability by recording auditory-evoked brainstem responses. In Slc4a10 KO mice, the hearing threshold was elevated and continued to rise with age, providing evidence that Slc4a10 is implicated in early-onset hearing loss.

To determine the cause of the early-onset hearing loss in the Slc4a10 KO mice, given that acid extrusion in the mutant mice was normal, the researchers tested for electrochemical and morphological disruptions in the cochlea. To do this, they measured the EP and stained tissue to visualize the morphology of cochlear hair cells. Huebner et al. found that the EP was lowered and that outer hair cells were progressively lost in Slc4a10 knockout mice, showing that Slc4a10 plays a crucial role in the maintenance of cochlear electrochemical and morphological integrity (Figure A).

Figure A: Immunofluorescent staining of hair cells of the organ of Corti shows progressive degeneration of hair cells in Slc4a10 knockout mice compared to normal hair cell morphology in wild-type mice.

Together, these experiments showed that knockout of Slc4a10 in mice results in early-onset hearing loss due to a lowered EP and progressive loss of outer hair cells. These findings are significant because they point to another potential therapeutic target for regulation of EP and hair cell morphology in deafness-related diseases.

Evaluating Psychophysical Polarity Sensitivity as an Indirect Estimate of Neural Status in Cochlear Implant Listeners. Kelly N. Jahn and Julie G. Arenberg. Jahn, K.N. & Arenberg, J.G. JARO (2019) 20: 415-430. https://doi.org/10.1007/s10162-019-00718-2

Reported by: Agudemu Borjigin, M.E., Purdue University, Department of Biomedical Engineering

This paper has important implications for evaluating the functional status of spiral ganglion neurons in cochlear implant (CI) listeners. Measuring and monitoring neural health in CI listeners is crucial not only for optimizing hearing status shortly after implantation, but also for evaluating the implant over time. While it has been difficult to assess the auditory nerve because it is not directly accessible, modeling studies have suggested that differential responses to the mode of CI electrode stimulation (positive vs. negative discharge of ions) may provide a means to assess neural health. The current study tests this prediction. Biophysical modeling suggested that anodic stimulation, i.e., discharging positive ions into the peripheral nerve, becomes more effective than cathodic stimulation in poor neural health conditions. The authors tested this so-called "polarity effect" and, more importantly, asked whether this evaluation approach was independent of two factors known to be associated with the electrode-neuron interface: electrode location and cochlear tissue resistance (CTR). Electrode location was estimated through computed tomography (CT) imaging for each subject (Figure A). The CTR was estimated using electrical field imaging to measure the voltage response to low-level current stimulation.

Figure A. CT imaging reveals electrode location within the cochlea. The image from a patient CT scan (a) is mapped onto an imaging atlas (b) and eventually combined with scans from other levels to create a 3D reconstruction, where red dots represent CI electrodes (c). From: Jahn & Arenberg, JARO 20: 415-430.

Results showed that the polarity effect was not related to electrode location or the CTR. Furthermore, the polarity effect explained a significant portion of the variation observed in a focused threshold measurement, which has been shown to reflect general cochlear status, including local electrode position, the CTR, and neural integrity. Combined, the data support the hypothesis that the polarity effect can be used as an indicator of neural integrity in CI listeners, independent of electrode location and the CTR. The hypothesis was further supported by the correlation between the polarity effect and the duration of deafness, which is a common implicit assessment of neural integrity. This evidence provides a way to evaluate neural contributions to the significant variation observed across CI listeners. This study has important clinical implications for individualizing CI programming for the best possible hearing outcome in patients. It also paves the way for clinical applications of emerging research technologies, such as gene therapy for neural regeneration, where neural assessment is usually the first step.

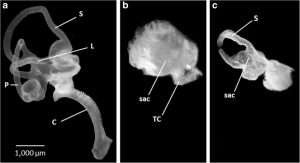

A New Model for Congenital Vestibular Disorders. Sigmund J. Lilian, Hayley E. Seal, Anastas Popratiloff, June C. Hirsch, and Kenna D. Peusner. Lilian, S.J., Seal, H.E., Popratiloff, A., Hirsch, J.C. & Peusner, K.D. JARO (2019) 20: 133. https://doi.org/10.1007/s10162-018-00705-z

Reported by: Lukas D. Landegger, M.D., Ph.D., Medical University of Vienna, Department of Otorhinolaryngology-Head and Neck Surgery

Congenital vestibular disorders comprise a range of inner ear pathologies, hampered motor development, and balance and posture problems. Apart from mouse models of congenital vestibular disorders, until recently, no appropriate animal model had been established, which limited the study of central vestibular circuits. The rewiring of these neural connections has important ramifications for the understanding and potential subsequent therapy of these debilitating conditions. In their recent publication, Lilian et al. describe a chick embryo model that allows them to analyze the developing vestibular system in the above-mentioned disorders. By surgically rotating the otocyst (a structure that gives rise to the inner ear) of 2-day-old chick embryos (180° in the anterior-posterior axis), they form the "anterior-posterior axis rotated otocyst" or ARO chick (a tip of the hat to our scientific society, perhaps?). In this model, a reproducible pathology of a sac with truncated or missing semicircular canals can be induced (Figure A), which represents the most prevalent inner ear defect in pediatric patients with congenital vestibular disorders.

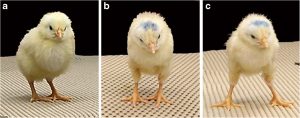

Figure A: Paint fills of inner ears on embryonic day 13 show differences between normal (a) and sac-like ARO chicks (b,c). From Lilian et al., 2019, JARO 20: 133-149.

Meticulous analysis of the resulting anatomy in embryonic day 13 ARO chicks led the researchers to discover that the sac contains all five vestibular organs (maculae utriculi and sacculi as well as three cristae), but the macula utriculi and superior crista are shortened along the anterior-posterior axis. The changes are not restricted to the inner ear but also include a synaptic station in the brainstem that receives vestibular input from the inner ear: the tangential nucleus demonstrates a 66% reduction in the number of principal cells on the rotated side. These anatomical changes are accompanied by a subset of behavioral deficits: the ARO chicks have a constant right head tilt and gait problems (stumbling and walking with a widened base; Figure B), mimicking some human forms of congenital vestibular disorders. In contrast, the righting reflex times after hatching are unaffected, with no difference observed between control and ARO chicks. This novel technique appears to be relatively straightforward and gives researchers an important tool to assess the resulting changes in the central nervous system, which should hopefully help to develop new treatments for people affected by congenital vestibular disorders.

Figure B: 5-day-old hatchling chicks show differences between a normal animal (a) and an ARO chick with a head tilt at rest (b) and a widened stance after performing a righting reflex (c). From Lilian et al., 2019, JARO 20: 133-149.

Interaction Between Pitch and Timbre Perception in Normal-Hearing Listeners and Cochlear Implant Users

Xin Luo, Samara Soslowsky, and Kathryn R. Pulling

Luo, X., Soslowsky, S. & Pulling, K.R. JARO (2019) 20: 57. https://doi.org/10.1007/s10162-018-00701-3

Reported by: Karen Chan Barrett, Ph.D. & Nicole Jiam, M.D., University of California, San Francisco, Department of Otolaryngology-Head and Neck Surgery

Cochlear implant (CI) users find it difficult to enjoy music, and a new paper by Luo et al. explores their struggles with perception of certain acoustic dimensions. Using an innovative approach, the authors studied the interaction between pitch and timbre perception, rather than treating these two features independently. Pitch is the perceptual correlate of fundamental frequency (F0); timbre refers to the quality of a sound, as distinct from pitch or intensity, that helps to differentiate instruments or speakers from one another. In normal-hearing (NH) adults, musical chords are perceived differently when played on different instruments [1], and non-musician adults have a difficult time rapidly categorizing stimuli based on pitch or timbre, often confusing the two [2].

In the current study, the authors designed two experiments to evaluate the relationship between pitch and timbre perception in non-musical NH listeners and CI users. Both designs revealed better performance when the two acoustic dimensions were varied congruently (as expected for human musical predilections) rather than incongruently, despite an overall worse performance by CI users.

In experiment 1, participants completed tasks to determine F0 and spectral slope (a timbre correlate) difference limens (DLs) without variations in the non-target dimension. Pitch and sharpness rankings were then separately tested when the F0 and the spectral slope of harmonic complex tones varied by the same multiple of individual DLs either congruently (e.g. a higher pitch accompanied by a sharper timbre, or a lower pitch by a duller timbre) or incongruently (e.g. a higher pitch with a duller timbre). In general, CI users had poorer timbre and pitch perception compared to NH adults. Additionally, a symmetric and bidirectional interaction between pitch and timbre perception was found, in that better performance was seen for congruent F0 and spectral slope variations.

In experiment 2, CI users performed melodic contour identification (MCI) of harmonic complex tones with or without spectral slope variations. All participants repeated pitch and timbre discrimination tasks to obtain F0 and spectral slope limens, and then completed the MCI task, in which the contours had no spectral slope variations, congruent variations (i.e. spectral slope and F0 increased together or decreased together), or incongruent variations (i.e. spectral slope and F0 moved in opposite directions). Results again demonstrated an interaction between pitch and timbre; better performance was found for congruent stimuli. Additionally, MCI performance was significantly degraded with amplitude roving, suggesting that there may also be a perceptual interaction between loudness and pitch cues.

In summary, this study is notable in that it explores how acoustic dimensions interact and are perceived by CI users, a crucial step towards a deeper understanding of complex sound perception in implantees. These findings are significant because they may have implications for future methods to improve music enjoyment in CI users.

1. Beal AL. The skill of recognizing musical structures. Mem Cognit. 1985 Sep;13(5):405–12.
2. Pitt MA. Perception of pitch and timbre by musically trained and untrained listeners. J Exp Psychol Hum Percept Perform. 1994 Oct;20(5):976–86.